Back to The EDiT Journal

A Solution Architect’s Guide: Multi-Account AWS Deployments [Part 1: Foundations]

Part 1 of a two-part guide for cloud architects, DevOps engineers, and technical leaders on automating multi-account AWS deployments with speed and security.

Cloud & Infrastructure

Tech Teams

In this article

The Challenge: Multi-Account AI Platform Delivery

Step 1: Build the Foundation with Terraform

Step 2: Bootstrap New AWS Accounts

Step 3: Modernize your Architecture

Step 4: Extend with AI/ML and Serverless Architecture

Step 5: Standardize CI/CD with GitHub Actions

Key takeaways

Managing infrastructure across multiple AWS accounts remains one of the most persistent challenges for organizations operating at scale. Manual processes—whether spinning up EC2 instances or deploying applications—introduce inconsistency, slow down delivery, and increase operational overhead. These inefficiencies create bottlenecks that limit agility and raise operational risk.

This is Part One of a two-part guide, diving into how to move from manual, error-prone infrastructure to a fully automated, scalable deployment pipeline powered by AWS, Terraform, and GitHub Actions.

Designed for cloud architects, DevOps engineers, and technical leaders, this guide outlines the architectural decisions, technical patterns, and best practices required to build repeatable CI/CD workflows that provision complete environments across multiple AWS accounts—securely and in minutes.

The ability to scale infrastructure securely and efficiently isn’t just a technical challenge; it’s what enables organizations to deliver solutions that are reliable, accessible, and future-ready, whether in EdTech or any other sector where technology drives meaningful impact. At EDT&Partners, we see automation and cloud strategy as more than engineering efficiency; they are examples of using technology with purpose to drive meaningful transformation at scale.

The Challenge: Multi-Account AI Platform Delivery

Designing and implementing a scalable AI platform across multiple AWS accounts comes with a familiar set of goals:

- Create a fully automated, production-ready AI platform

- Enable rapid deployment to new customer accounts

- Maintain security and isolation between environments

- Provide a smooth handover process to customers

- Support ongoing updates through secure cross-account access

The architectural challenge is significant: design a system that can be deployed consistently across different AWS accounts while maintaining security best practices and enabling seamless updates through AWS Organizations.
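Secure cross-account updates usually mean the deployment pipeline assumes an IAM role in each customer account instead of storing long-lived keys there. The sketch below assumes that role is `OrganizationAccountAccessRole`, the default role AWS Organizations creates in member accounts it provisions. Your accounts may use a different, more narrowly scoped role. The session name is a hypothetical placeholder. The STS client is passed in, so the function can be exercised with a stub; in practice you would pass `boto3.client("sts")`.

```python
from typing import Any, Dict

# Default role AWS Organizations creates in member accounts it provisions.
# Assumption: your accounts may use a different, least-privilege role name.
DEFAULT_ROLE = "OrganizationAccountAccessRole"


def role_arn(account_id: str, role_name: str = DEFAULT_ROLE) -> str:
    """Build the ARN of the role to assume in the target account."""
    if not (account_id.isdigit() and len(account_id) == 12):
        raise ValueError(f"not a 12-digit AWS account ID: {account_id!r}")
    return f"arn:aws:iam::{account_id}:role/{role_name}"


def assume_deploy_role(sts_client: Any, account_id: str,
                       session_name: str = "cross-account-deploy") -> Dict[str, str]:
    """Assume the role and return temporary credentials as boto3.Session kwargs.

    `session_name` is a hypothetical label; it shows up in CloudTrail logs.
    """
    response = sts_client.assume_role(
        RoleArn=role_arn(account_id),
        RoleSessionName=session_name,
        DurationSeconds=3600,  # temporary credentials expire after one hour
    )
    creds = response["Credentials"]
    return {
        "aws_access_key_id": creds["AccessKeyId"],
        "aws_secret_access_key": creds["SecretAccessKey"],
        "aws_session_token": creds["SessionToken"],
    }
```

Usage: `boto3.Session(**assume_deploy_role(boto3.client("sts"), "123456789012"))` yields a session scoped to the customer account. Nothing permanent is stored outside it.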

The Solution: AWS Services & Modern DevOps Practices

Building a scalable, secure, and maintainable platform across multiple AWS accounts requires a deliberate combination of AWS services and modern DevOps practices.

Core Infrastructure Components

- AWS Organizations for multi-account management

- AWS IAM for identity and access management

- Amazon VPC for network isolation

- Amazon ECS for container orchestration

- Amazon RDS for managed databases

- AWS Secrets Manager for secrets management

Key Architectural Decisions

- Infrastructure as Code (IaC) using Terraform for reproducibility

- CI/CD Pipeline with GitHub Actions for automated deployments

- Container Orchestration with ECS for scalability

- Integration Testing with Cypress for reliability

- Secrets Management using AWS Parameter Store and Secrets Manager


Initial State: Manual, Risky, and Slow

Many organizations still rely on manual infrastructure setups that look something like this:

- Infrastructure created manually in the AWS Console

- Each new environment or account looks slightly different, making debugging a nightmare

- No consistent deployment procedures or rollback strategies

- Inability to deliver new environments on time.

- No testing in place

- CI/CD pipelines limited to pushing images to repositories, with no automated deployment

These challenges are common—and they highlight the need for a more strategic approach. The goals in moving forward are clear:

- Defining infrastructure as code

- Automating deployment using CI/CD

- Enabling scalable provisioning of isolated environments per customer

- Automating updates of existing environments

- Adding testing to our pipelines

- Adding security to our pipelines

- Enabling integration testing for provisioned environments

- Documenting processes and standardizing the code for secure variable usage

- Removing hardcoded secrets from the code

- Removing access credentials from the runtime in favor of profiles

- Adding scalability by moving to a serverless approach

- Enhancing system reliability by introducing autoscaling

- Reducing the fixed infrastructure footprint by using on-demand compute and serverless technologies such as Aurora Serverless, Fargate, and Lambda functions

The following steps outline how to move from this manual, error-prone state to a secure, automated, and scalable multi-account deployment strategy.

Step 1: Build the Foundation with Terraform

Your first step toward automation should be establishing a solid infrastructure foundation. Here’s how you can approach it:

- Create reusable modules for core services: `vpc`, `ecs`, `rds`, `s3`, etc.

- Introduce remote state using S3, with DynamoDB for state locking

- Set up workspaces for `dev`, `staging`, and `prod`

- Set up patterns that create similar services from arrays, which lets the microservice strategy scale:

```hcl
module "lecture_ecs_services" {
  source   = "./terraform-ecs-service-module"
  for_each = { for idx, config in local.ecs_config : idx => config }

  project           = var.project
  vpc_id            = module.lecture_vpc.vpc_id
  region            = var.aws_region
  alb_dns_name      = module.lecture_ecs_alb.alb_dns_name
  db_connection_arn = module.rds_cluster.lecture_database_connection_secret_arn
  repository_name   = each.value.repository_name

  # ...remaining inputs omitted from this excerpt
}
```
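The `for_each` expression turns the `local.ecs_config` list into a map keyed by list index. Terraform therefore tracks each service under an address like `module.lecture_ecs_services["0"]`. The Python sketch below mimics that comprehension to show a trade-off worth knowing. With index keys, removing or reordering an entry shifts every later service to a new key, so Terraform plans changes for all of them. Keying by a stable attribute such as `repository_name` avoids that. The service names are hypothetical.

```python
from typing import Dict, List

# Hypothetical stand-in for local.ecs_config: one entry per microservice.
ecs_config: List[Dict[str, str]] = [
    {"repository_name": "lecture-api"},
    {"repository_name": "lecture-worker"},
    {"repository_name": "lecture-web"},
]


def keyed_by_index(configs):
    """Mirror `{ for idx, config in local.ecs_config : idx => config }`.
    Terraform map keys are strings, hence str(idx)."""
    return {str(idx): cfg for idx, cfg in enumerate(configs)}


def keyed_by_name(configs):
    """Alternative: `{ for config in local.ecs_config : config.repository_name => config }`."""
    return {cfg["repository_name"]: cfg for cfg in configs}


def changed_keys(before, after):
    """Keys whose value differs or exists on only one side (what a plan would touch)."""
    return sorted(k for k in before.keys() | after.keys() if before.get(k) != after.get(k))


trimmed = ecs_config[1:]  # drop the first service
print(changed_keys(keyed_by_index(ecs_config), keyed_by_index(trimmed)))  # ['0', '1', '2']
print(changed_keys(keyed_by_name(ecs_config), keyed_by_name(trimmed)))    # ['lecture-api']
```

With name keys, only the removed service is touched. This matters more as the number of microservices grows.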

Common Problems You’ll Face

- Manual infrastructure creation leading to inconsistencies

- No version control for infrastructure changes

- Lack of standardization across environments

- Difficulty tracking and managing infrastructure state

- High risk of human error in deployments

How to Solve Them

By integrating DevOps Best Practices:

- Use Infrastructure as Code with Terraform modules

- Keep all infrastructure changes under version control

- Standardize deployment patterns across environments

- Centralize state management with S3 and DynamoDB

- Automate infrastructure provisioning
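With the S3 backend, each workspace gets its own state object in the same bucket. The `default` workspace uses the configured `key`. Any other workspace is stored under `<workspace_key_prefix>/<workspace>/<key>`, where the prefix defaults to `env:`. The small model below reproduces that layout so you can predict where `dev`, `staging`, and `prod` state will land. The lock ID format (`<bucket>/<state path>`) reflects our understanding of how the backend names its DynamoDB lock item. The bucket and key names are hypothetical.

```python
def state_path(key: str, workspace: str = "default",
               workspace_key_prefix: str = "env:") -> str:
    """Object key of the Terraform state for a workspace in the S3 backend."""
    if workspace == "default":
        return key
    return f"{workspace_key_prefix}/{workspace}/{key}"


def lock_id(bucket: str, path: str) -> str:
    """LockID of the DynamoDB item that guards concurrent runs on that state."""
    return f"{bucket}/{path}"


BUCKET = "example-terraform-state"   # hypothetical bucket name
KEY = "platform/terraform.tfstate"   # hypothetical backend `key`

for ws in ("default", "dev", "staging", "prod"):
    path = state_path(KEY, ws)
    print(f"{ws:8} s3://{BUCKET}/{path}  lock={lock_id(BUCKET, path)}")
```

Because each environment has a separate state object and lock, a `prod` apply cannot collide with a `dev` apply.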

Step 2: Bootstrap New AWS Accounts

The next step is to make sure every new AWS account starts off fully operational and consistent. Instead of setting things up manually each time, you can bootstrap accounts with repeatable, automated processes.

Here’s how to do it:

- Create isolated infrastructure with Terraform, following best practices

- Set up IAM, VPC, ECS, RDS and monitoring

- Use GitHub Actions to provision and deploy

To streamline this further:

- Use reusable modules and per-user variable files

- Write bootstrap scripts for repeatability

```bash
./scripts/bootstrap.sh --account new-user --region us-east-1
```

Common Problems You’ll Face

- Time-consuming manual account setup process

- Inconsistent configurations across new accounts

- No standardized handover process

- Risk of missing critical setup steps

- Complex multi-account management

How to Solve Them

By integrating DevOps Best Practices:

- Automated bootstrap process for new accounts

- Standardized account configurations

- Repeatable infrastructure provisioning

- Simplified account handover process

- Consistent monitoring and security setup
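The article does not publish `bootstrap.sh`, so the Python sketch below only illustrates the kind of repeatable sequence such a script might run for `--account new-user --region us-east-1`. It selects or creates a Terraform workspace named after the account, then plans and applies with that account's variable file. The `users/<account>.tfvars` layout is a hypothetical convention. Printing the commands instead of executing them is also a choice made for this sketch. `workspace select -or-create` requires Terraform 1.4 or later.

```python
import argparse
import re
import shlex
from typing import List, Optional


def bootstrap_commands(account: str, region: str) -> List[List[str]]:
    """Return, in order, the Terraform commands that provision one account."""
    if not re.fullmatch(r"[a-z0-9-]+", account):
        raise ValueError(f"invalid account name: {account!r}")
    var_file = f"users/{account}.tfvars"  # hypothetical per-user variable file
    return [
        ["terraform", "init", "-input=false"],
        # One workspace per account keeps each account's state separate.
        ["terraform", "workspace", "select", "-or-create", account],
        ["terraform", "plan", "-input=false", f"-var-file={var_file}",
         f"-var=aws_region={region}", "-out=tfplan"],
        ["terraform", "apply", "-input=false", "tfplan"],
    ]


def main(argv: Optional[List[str]] = None) -> None:
    """Hypothetical CLI mirroring the flags of bootstrap.sh."""
    parser = argparse.ArgumentParser(description="Bootstrap a new AWS account (sketch)")
    parser.add_argument("--account", required=True)
    parser.add_argument("--region", default="us-east-1")
    args = parser.parse_args(argv)
    for cmd in bootstrap_commands(args.account, args.region):
        print(shlex.join(cmd))  # dry run; swap for subprocess.run(cmd, check=True)


main(["--account", "new-user", "--region", "us-east-1"])
```

Keeping the sequence in one script means every account goes through the same steps in the same order. This addresses the "missing critical setup steps" problem directly.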

Step 3: Modernize your Architecture

Once you’ve automated the basics, the next step is to modernize your architecture so it scales efficiently and keeps costs under control. A serverless-first approach allows you to reduce your EC2 footprint, pay only for what you use, and add AI/ML capabilities on top.

Here’s how you can approach modernization:

- Container Orchestration:

  - Migrate from EC2/docker-compose to ECS

  - Implement automated deployment strategies

  - Enable dynamic scaling capabilities

- Serverless Components:

  - AWS Lambda for event-driven processing

  - Step Functions for workflow orchestration

  - API Gateway for serverless APIs

- AI/ML Integration:

  - Amazon Bedrock for AI capabilities

  - Knowledge bases for documentation

  - OpenSearch for intelligent search

- Security and Configuration:

  - Secret injection via Parameter Store

  - Runtime configuration management

  - Cross-account access controls
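Secret injection via Parameter Store can be as simple as reading every parameter under a per-environment path when a service starts. The sketch assumes a hypothetical `/<project>/<environment>/` naming convention, such as `/lecture/dev`. It takes an SSM client as an argument, for example `boto3.client("ssm")`. It follows `NextToken` because `get_parameters_by_path` returns results in pages.

```python
from typing import Any, Dict


def load_parameters(ssm_client: Any, path: str) -> Dict[str, str]:
    """Read every parameter under `path`, decrypting SecureString values.

    Keys are names relative to `path`, upper-cased, with '/' and '-' turned
    into '_': '/lecture/dev/db/host' becomes 'DB_HOST' (example path is hypothetical).
    """
    base = "/" + path.strip("/")
    prefix = base + "/"
    params: Dict[str, str] = {}
    kwargs: Dict[str, Any] = {"Path": base, "Recursive": True, "WithDecryption": True}
    while True:
        page = ssm_client.get_parameters_by_path(**kwargs)
        for p in page["Parameters"]:
            relative = p["Name"][len(prefix):]
            params[relative.replace("/", "_").replace("-", "_").upper()] = p["Value"]
        token = page.get("NextToken")
        if not token:
            return params
        kwargs["NextToken"] = token  # results are paginated; request the next page
```

A service could call `os.environ.update(load_parameters(boto3.client("ssm"), "/lecture/dev"))` at startup. Its task role needs read access only to its own path.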

Architecture Proposal


Common Problems You’ll Face

- High operational costs with EC2 instances

- Limited scalability with docker-compose

- Manual container orchestration

- Static resource allocation

- Complex deployment processes

How to Solve Them

By integrating DevOps Best Practices:

- Migrate to container orchestration with ECS

- Automate scaling based on demand

- Improve resource utilization

- Cost optimization through serverless components

- Streamline deployment processes
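Demand-based scaling for ECS services is typically configured through Application Auto Scaling with a target-tracking policy, for example average CPU at 60%. The function below is a simplified, proportional model of that behavior. It is not the service's exact algorithm, which also applies cooldowns and scale-in protections. It estimates the task count that would bring the metric back to target, clamped to the service's minimum and maximum.

```python
import math


def desired_task_count(current_tasks: int, metric_value: float, target_value: float,
                       min_tasks: int, max_tasks: int) -> int:
    """Estimate the ECS task count that brings a utilization metric back to target.

    Simplified model: load is assumed to spread evenly across tasks, so
    capacity scales in proportion to metric_value / target_value.
    """
    if target_value <= 0 or current_tasks < 1:
        raise ValueError("target_value must be positive and current_tasks >= 1")
    proposed = math.ceil(current_tasks * metric_value / target_value)
    return max(min_tasks, min(max_tasks, proposed))


print(desired_task_count(4, metric_value=90.0, target_value=60.0, min_tasks=2, max_tasks=10))  # 6
print(desired_task_count(4, metric_value=15.0, target_value=60.0, min_tasks=2, max_tasks=10))  # 2
```

The clamp is what keeps costs bounded. The maximum caps spend during spikes, and the minimum preserves availability when traffic is idle.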

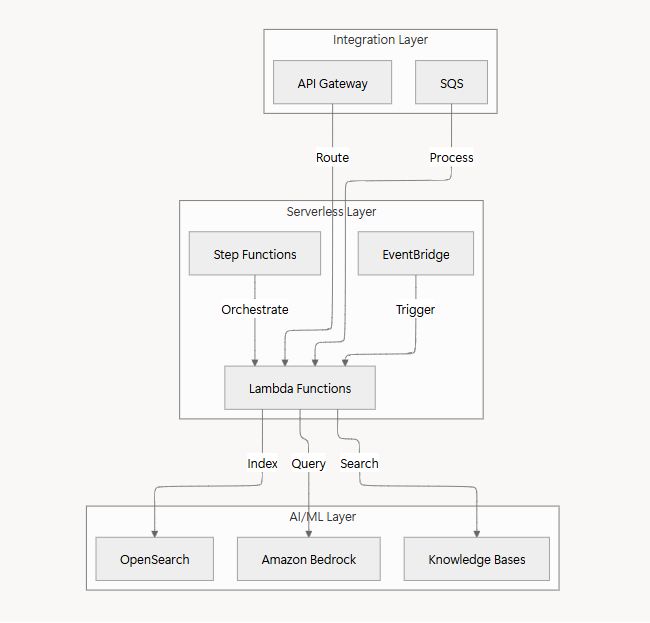

Step 4: Extend with AI/ML and Serverless Architecture

Architecture Diagram

Once your core infrastructure is automated and modernized, the next step is to extend your platform with AI/ML capabilities and serverless workflows. This helps you scale intelligently, reduce operational overhead, and deliver smarter features to end users.

AI/ML Integration with Amazon Bedrock

- Foundation Models: Leveraging Amazon Bedrock for:

  - Text generation and summarization

  - Code analysis and documentation

  - Natural language processing tasks

- Custom Model Deployment: Using SageMaker endpoints

- AI-Powered Features:

  - Automated code review suggestions

  - Documentation generation

  - Intelligent monitoring alerts
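For text generation and summarization, the Bedrock Runtime `Converse` API provides a consistent request shape across the foundation models that support it. The sketch below takes a `bedrock-runtime` client, such as `boto3.client("bedrock-runtime")`, and the ID of a model enabled in the account. The system prompt and inference settings are illustrative choices, not recommendations.

```python
from typing import Any


def summarize(client: Any, model_id: str, text: str, max_tokens: int = 300) -> str:
    """Ask a Bedrock foundation model for a short summary of `text`."""
    response = client.converse(
        modelId=model_id,
        # Illustrative prompt; tune it for your use case.
        system=[{"text": "You summarize technical documents in plain language."}],
        messages=[{
            "role": "user",
            "content": [{"text": f"Summarize the following in three sentences:\n\n{text}"}],
        }],
        inferenceConfig={"maxTokens": max_tokens, "temperature": 0.2},
    )
    # The reply is a list of content blocks; join the text ones.
    blocks = response["output"]["message"]["content"]
    return "".join(b["text"] for b in blocks if "text" in b)
```

Usage: `summarize(boto3.client("bedrock-runtime"), "<model-id>", document_text)`, where `<model-id>` is a placeholder for a model you have access to. Keeping the client injectable makes the function easy to test without calling Bedrock.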

Knowledge Base Management

- OpenSearch Service:

  - Centralized documentation repository

  - Full-text search capabilities

  - Real-time indexing and updates

- Vector Embeddings:

  - Semantic search functionality

  - Similar document recommendations

  - Content clustering
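Semantic search and similar-document recommendations both come down to ranking documents by how close their embedding vectors are to a query vector. OpenSearch performs this server-side with its k-NN search. The pure-Python sketch below only shows the underlying idea using cosine similarity. Tiny hand-made vectors stand in for real embeddings, which would come from an embedding model and have hundreds of dimensions.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity: 1.0 means same direction, 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: Sequence[float], docs: Dict[str, List[float]],
          k: int = 2) -> List[Tuple[str, float]]:
    """Return the k documents whose embeddings are most similar to the query."""
    scored = [(doc_id, cosine(query, vec)) for doc_id, vec in docs.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)[:k]


# Toy 3-dimensional "embeddings" (hypothetical values, for illustration only).
docs = {
    "deploy-guide": [0.9, 0.1, 0.0],
    "rollback-runbook": [0.7, 0.3, 0.1],
    "billing-faq": [0.0, 0.2, 0.9],
}
print(top_k([1.0, 0.2, 0.0], docs))
```

A brute-force scan like this is fine for a handful of documents. An approximate k-NN index is what makes the same ranking practical over a large knowledge base.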

Serverless Workflow Orchestration

- AWS Step Functions:

  - Complex deployment workflows

  - Error handling and retry logic

  - State machine management

  - Parallel execution of tasks
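In Step Functions, retry and error handling are declared in the state machine definition, written in Amazon States Language, rather than coded by hand. The sketch below builds a small, hypothetical deployment workflow as a Python dict. A `Parallel` state runs two deployment tasks at once, and each task retries transient failures with exponential backoff. Any remaining error is caught and routed to a `Fail` state. The Lambda ARNs are placeholders. The printed JSON is the kind of document you would pass as the state machine `definition`. A helper also spells out the waits the `Retry` block produces.

```python
import json
from typing import Dict, List

# Placeholder ARNs: substitute your own Lambda functions.
BACKEND_FN = "arn:aws:lambda:us-east-1:123456789012:function:deploy-backend"
FRONTEND_FN = "arn:aws:lambda:us-east-1:123456789012:function:deploy-frontend"

RETRY = [{
    "ErrorEquals": ["States.TaskFailed", "States.Timeout"],
    "IntervalSeconds": 2,
    "MaxAttempts": 3,
    "BackoffRate": 2.0,
}]


def task_branch(name: str, resource: str) -> Dict:
    """One branch of the Parallel state: a single Task with retries."""
    return {"StartAt": name,
            "States": {name: {"Type": "Task", "Resource": resource,
                              "Retry": RETRY, "End": True}}}


definition = {
    "Comment": "Hypothetical deployment workflow",
    "StartAt": "DeployServices",
    "States": {
        "DeployServices": {
            "Type": "Parallel",
            "Branches": [task_branch("DeployBackend", BACKEND_FN),
                         task_branch("DeployFrontend", FRONTEND_FN)],
            # Errors that survive the retries end up here.
            "Catch": [{"ErrorEquals": ["States.ALL"], "Next": "DeploymentFailed"}],
            "Next": "DeploymentSucceeded",
        },
        "DeploymentFailed": {"Type": "Fail", "Error": "DeployError",
                             "Cause": "A deployment branch failed after retries"},
        "DeploymentSucceeded": {"Type": "Succeed"},
    },
}


def retry_delays(interval: float, backoff: float, attempts: int) -> List[float]:
    """Wait before each retry: interval, interval*backoff, interval*backoff**2, ..."""
    return [interval * backoff ** n for n in range(attempts)]


print(json.dumps(definition, indent=2))
print(retry_delays(2, 2.0, 3))  # [2.0, 4.0, 8.0]
```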

Lambda Functions

- Event-Driven Processing:

  - Repository webhook handling

  - Log processing and analysis

  - Scheduled maintenance tasks

- Integration Points:

  - API Gateway backends

  - Custom resource handlers

  - Cross-account operations
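A repository-webhook handler is a typical event-driven Lambda function behind API Gateway. The sketch below verifies GitHub's `X-Hub-Signature-256` header before trusting the payload. That header holds an HMAC-SHA256 of the raw body, prefixed with `sha256=`. The sketch assumes a Lambda proxy-integration event and a hypothetical `WEBHOOK_SECRET` environment variable. On this platform that value would more likely be injected from Secrets Manager or Parameter Store. What happens after a valid event arrives is left as a stub.

```python
import base64
import hashlib
import hmac
import json
import os
from typing import Any, Dict


def _verify(secret: bytes, body: bytes, signature: str) -> bool:
    """Compare GitHub's signature header with our own HMAC in constant time."""
    expected = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, signature)


def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    # Header-name casing differs between API Gateway API types; normalize it.
    headers = {k.lower(): v for k, v in (event.get("headers") or {}).items()}
    raw = event.get("body") or ""
    body = base64.b64decode(raw) if event.get("isBase64Encoded") else raw.encode("utf-8")

    secret = os.environ["WEBHOOK_SECRET"].encode("utf-8")  # hypothetical variable name
    if not _verify(secret, body, headers.get("x-hub-signature-256", "")):
        return {"statusCode": 401, "body": json.dumps({"error": "invalid signature"})}

    payload = json.loads(body)
    event_type = headers.get("x-github-event", "unknown")
    repo = payload.get("repository", {}).get("full_name")
    # Stub: dispatch on event_type (e.g. "push") to start a deployment workflow.
    return {"statusCode": 202, "body": json.dumps({"event": event_type, "repository": repo})}
```

Verifying against the raw body, before any JSON parsing, matters: re-serializing the payload can change bytes and break the signature check.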

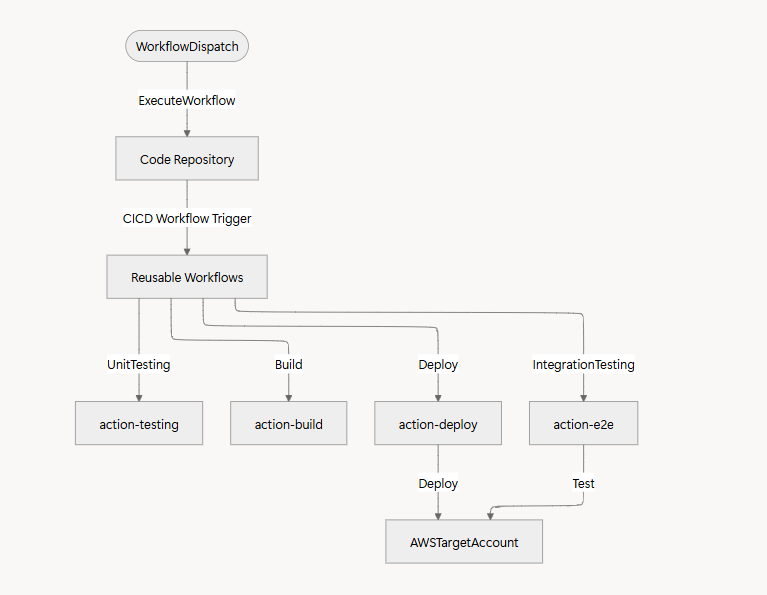

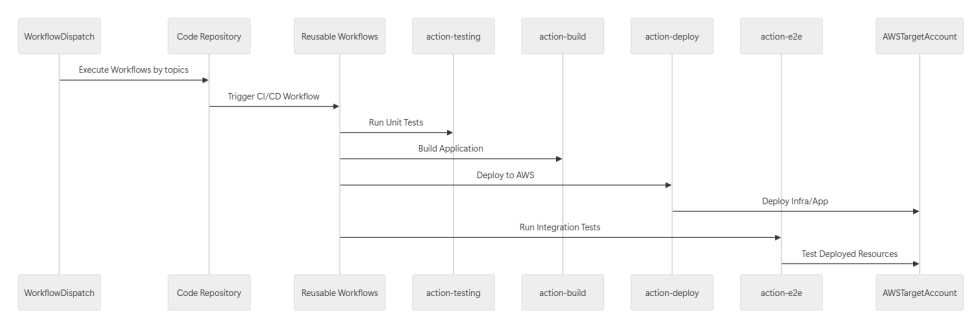

Step 5: Standardize CI/CD with GitHub Actions

As you move toward a microservices approach, you’ll need consistent patterns and reusable code to manage deployments effectively. GitHub Actions can help you standardize and scale your CI/CD strategy with reusable workflows and composite actions.

GitHub Reusable Workflow Strategy

Triggering Workflows

We centralize deployments in a reusable dispatch workflow. It selects the repositories that belong to the architecture by their GitHub topics, then runs each of those repositories' deployment workflows.

```bash
repos=$(curl -s \
  -H "Authorization: Bearer ${{ secrets.GH_TOKEN }}" \
  "https://api.github.com/orgs/${{ github.repository_owner }}/repos?type=all&per_page=100" | \
  jq -r ".[] | select(.topics | index(\"${{ inputs.tag }}\")) | .name")
```
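One caveat with this snippet: `per_page=100` returns at most 100 repositories, so an organization with more repositories needs to follow additional pages. The Python sketch below applies the same topic filter as the `jq` expression. It keeps requesting `page=1, 2, ...` until a page comes back short. The page fetcher is injected so the logic can be tested offline. A real fetcher would call the same organization repositories endpoint with a `page` parameter and the token, as the shell step does.

```python
from typing import Callable, Dict, List

Repo = Dict[str, object]
PER_PAGE = 100  # GitHub's maximum page size for this endpoint


def repos_with_topic(fetch_page: Callable[[int], List[Repo]], topic: str) -> List[str]:
    """Collect names of repositories tagged with `topic`, across all pages.

    `fetch_page(n)` is an injected function that returns the decoded JSON
    list for page n (1-based).
    """
    names: List[str] = []
    page = 1
    while True:
        repos = fetch_page(page)
        names.extend(str(r["name"]) for r in repos if topic in (r.get("topics") or []))
        if len(repos) < PER_PAGE:  # a short (or empty) page is the last one
            return names
        page += 1
```

The short-page check is a simple heuristic. Following the `Link` response header that GitHub returns is the more robust way to detect the last page.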

Repository Structure

GitHub Environments are a key component. Each user has an environment that holds their variables, which gives us a way to inject those variables into our workflows at runtime.

```yaml
name: 'Build Application Process'

on:
  workflow_call:
    inputs:
      user:
        required: true
        type: string

env:
  AWS_ACCOUNT_ID: ${{ vars.AWS_ACCOUNT_ID }}
  AWS_REGION: ${{ vars.AWS_REGION }}

jobs:
  deploy:
    environment: ${{ inputs.user }}
    runs-on: ubuntu-latest
    permissions:
      id-token: write
      contents: read
    steps:
      - name: ✨ Checkout ✨
        uses: actions/checkout@v4

      - name: ⚙️ Setting up Environment Variables ⚙️
        uses: EDT-Partners-Tech/action-build@main
```
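Because the job sets `environment: ${{ inputs.user }}`, each `vars.*` lookup resolves against that user's GitHub Environment first. GitHub documents the precedence for same-named variables as environment, then repository, then organization. The Python model below reproduces that lookup order to make the injection explicit. The environment names and values are hypothetical.

```python
from typing import Dict, Mapping, Optional


def resolve_var(name: str,
                environment: Mapping[str, str],
                repository: Mapping[str, str],
                organization: Mapping[str, str]) -> Optional[str]:
    """Return the value `${{ vars.<name> }}` would see: environment > repo > org.

    An undefined variable yields None here; in a workflow it becomes an empty string.
    """
    for scope in (environment, repository, organization):
        if name in scope:
            return scope[name]
    return None


# Hypothetical variables for two customer environments.
org_vars = {"AWS_REGION": "us-east-1"}
repo_vars: Dict[str, str] = {}
env_vars = {
    "customer-a": {"AWS_ACCOUNT_ID": "111111111111"},
    "customer-b": {"AWS_ACCOUNT_ID": "222222222222", "AWS_REGION": "eu-west-1"},
}

for user, scoped in env_vars.items():
    print(user, {n: resolve_var(n, scoped, repo_vars, org_vars)
                 for n in ("AWS_ACCOUNT_ID", "AWS_REGION")})
```

This is why one workflow file can serve every customer. Shared defaults live at the organization level, and each environment overrides only what differs.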

Secrets Management

Each customer has isolated credentials stored as secrets in Secrets Manager and Parameter Store. These secrets are never queried from outside the cloud environment.
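Since secrets stay inside the customer account, services read them at runtime through their own IAM role instead of receiving them from the pipeline. The minimal sketch below assumes a database connection secret, like the one whose ARN the Terraform module receives as `db_connection_arn`. It assumes that secret is stored as a JSON string with hypothetical `host`, `username`, and `password` fields and a PostgreSQL engine. In practice the client is `boto3.client("secretsmanager")`. A small cache avoids calling the API on every request.

```python
import json
from typing import Any, Dict
from urllib.parse import quote


class SecretCache:
    """Fetch each secret once per process and keep the parsed JSON in memory."""

    def __init__(self, client: Any):
        self._client = client
        self._cache: Dict[str, Dict[str, Any]] = {}

    def get_json(self, secret_id: str) -> Dict[str, Any]:
        if secret_id not in self._cache:
            response = self._client.get_secret_value(SecretId=secret_id)
            if "SecretString" not in response:
                raise ValueError(f"{secret_id} is a binary secret, expected JSON text")
            self._cache[secret_id] = json.loads(response["SecretString"])
        return self._cache[secret_id]


def database_url(secrets: SecretCache, secret_arn: str) -> str:
    """Build a connection URL; field names and the PostgreSQL scheme are assumptions."""
    s = secrets.get_json(secret_arn)
    user = quote(s["username"], safe="")
    password = quote(s["password"], safe="")  # escape characters such as '@' or '/'
    return (f"postgresql://{user}:{password}@{s['host']}:{s.get('port', 5432)}"
            f"/{s.get('dbname', 'postgres')}")
```

One trade-off of caching: a rotated secret is not picked up until the process restarts or the cache entry is cleared.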

Common Problems You’ll Face

- No standardized SDLC for Application Deployment

- Lack of version control and tracking

- Duplicate code across repositories

- Insecure secrets management

- Complex deployment coordination across services

- No consistent workflow between environments

How to Solve Them

By integrating DevOps Best Practices

- Centralize deployment strategy with reusable workflows

- Standardize SDLC following industry best practices

- Automate build and deployment pipelines

- Secure secrets management with AWS and GitHub

- Environment-based configuration management

- Streamline developer experience with composite actions

Key takeaways

Automation transforms multi-account AWS management from a manual burden into a scalable, secure, and repeatable process—enabling faster deployments, stronger security, and greater capacity for innovation.

Through Infrastructure as Code, modern CI/CD practices, and AWS-native services, organizations can evolve from error-prone setups to fully automated, production-ready platforms that scale across accounts. This shift standardizes deployments, enhances security, reduces costs with serverless-first strategies, and creates the foundation for integrating AI/ML capabilities.

To explore the next phase—go to: A Solution Architect’s Guide: Multi-Account AWS Deployments [Part 2: App Deployment & Scaling].
